Lishuang Zhan | 詹李双

I am currently a Ph.D. student (since fall 2021) at the School of Informatics, Xiamen University, advised by Prof. Shihui Guo.

My research focuses on natural human-computer interaction, flexible wearables, and multimodal sensing.

Education

| 2021-present | Xiamen University, Successive Master's-Doctoral Program in Computer Science and Technology |

| 2017-2021 | Xiamen University, Bachelor's degree in Digital Media Technology |

News

| 07/2024: Our paper SATPose on multimodal 3D human pose estimation is accepted to ACM MM 2024. |

| 02/2024: Our paper Loose Inertial Poser on motion capture with loose-wear IMUs is accepted to CVPR 2024. |

| 10/2023: Our paper TouchEditor on a flexible text editing system is accepted to UbiComp/IMWUT 2024. |

| 06/2023: Our paper Touch-and-Heal on data-driven affective computing is accepted to UbiComp/IMWUT 2023. |

| 01/2023: Our paper Touchable Robot Dog on tactile interaction between humans and robot dogs is accepted to ICRA 2023. |

| 10/2022: Our paper Handwriting Velcro on a flexible text input system is accepted to UbiComp/IMWUT 2023. |

| 08/2022: Our paper Sparse Flexible Mocap on sparse joint tracking is accepted to ACM TOMM 2023. |

| 10/2021: Our paper MSMD-VisPro on data processing and visualization won the Best Poster Paper Award at ChinaVR 2021. |

Publications

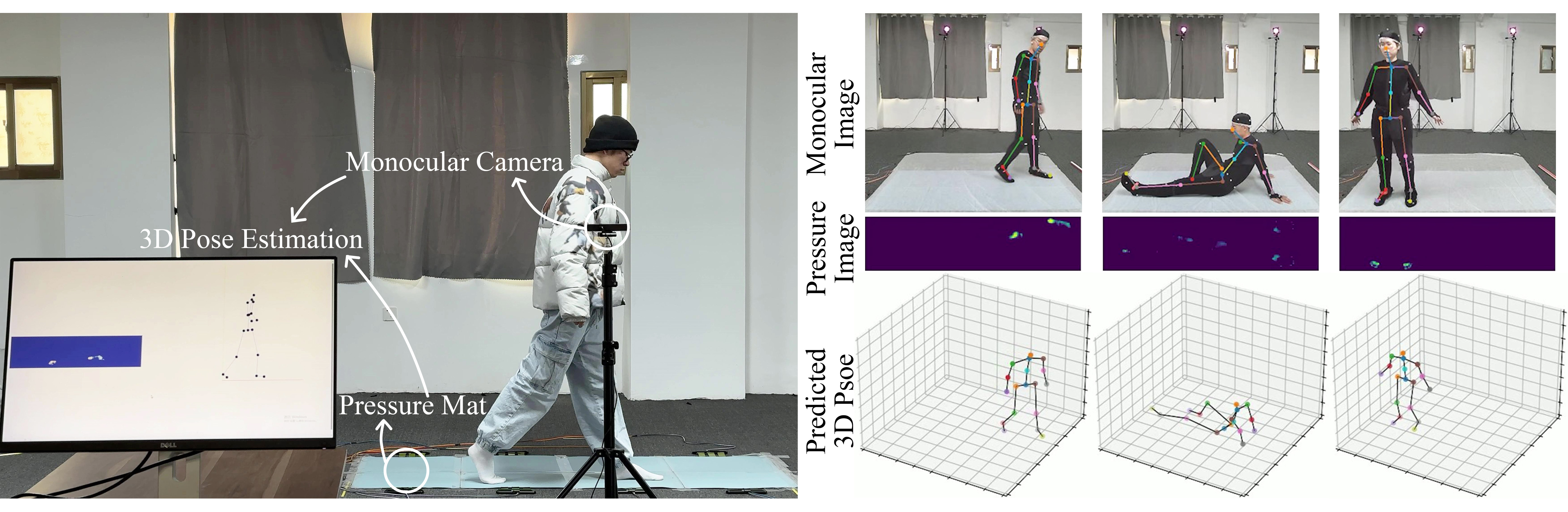

| SATPose: Improving Monocular 3D Pose Estimation with Spatial-aware Ground Tactility. Lishuang Zhan, Enting Ying, Jiabao Gan, Shihui Guo*, Boyu Gao, Yipeng Qin (* corresponding author). Proceedings of the 32nd ACM International Conference on Multimedia (ACM MM), 2024. SATPose is a novel multimodal approach to 3D human pose estimation that mitigates the depth ambiguity inherent in monocular solutions by integrating spatial-aware pressure information. |

| Loose Inertial Poser: Motion Capture with IMU-attached Loose-Wear Jacket. Chengxu Zuo, Yiming Wang, Lishuang Zhan, Shihui Guo*, Xinyu Yi, Feng Xu, Yipeng Qin (* corresponding author). Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), 2024. Loose Inertial Poser is a novel motion capture solution that offers high wearing comfort by integrating four Inertial Measurement Units (IMUs) into a loose-wear jacket. |

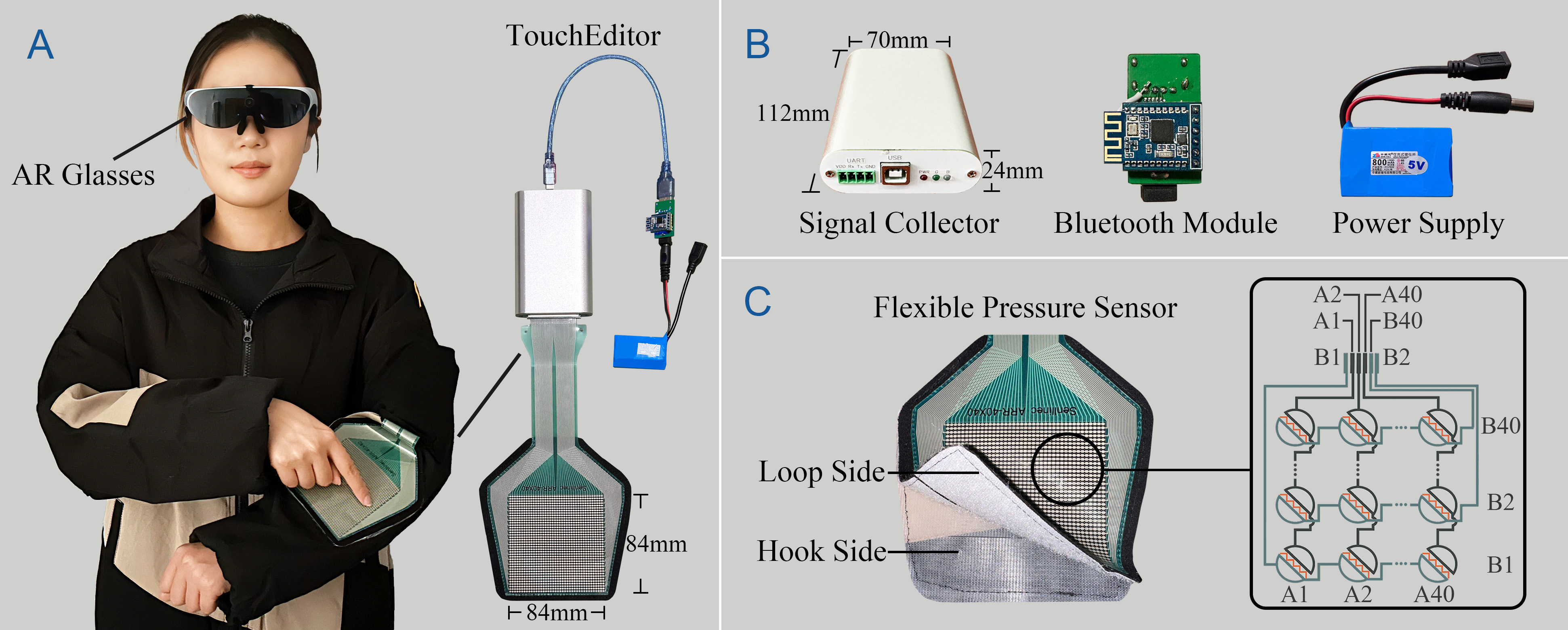

| TouchEditor: Interaction Design and Evaluation of a Flexible Touchpad for Text Editing of Head-Mounted Displays in Speech-unfriendly Environments. Lishuang Zhan, Tianyang Xiong, Hongwei Zhang, Shihui Guo*, Xiaowei Chen, Jiangtao Gong, Juncong Lin, Yipeng Qin (* corresponding author). Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (UbiComp/IMWUT), 2024. TouchEditor is a novel text editing system for HMDs based on a flexible piezoresistive film sensor, supporting cursor positioning, text selection, text retyping, and editing commands (e.g., Copy, Paste, and Delete). |

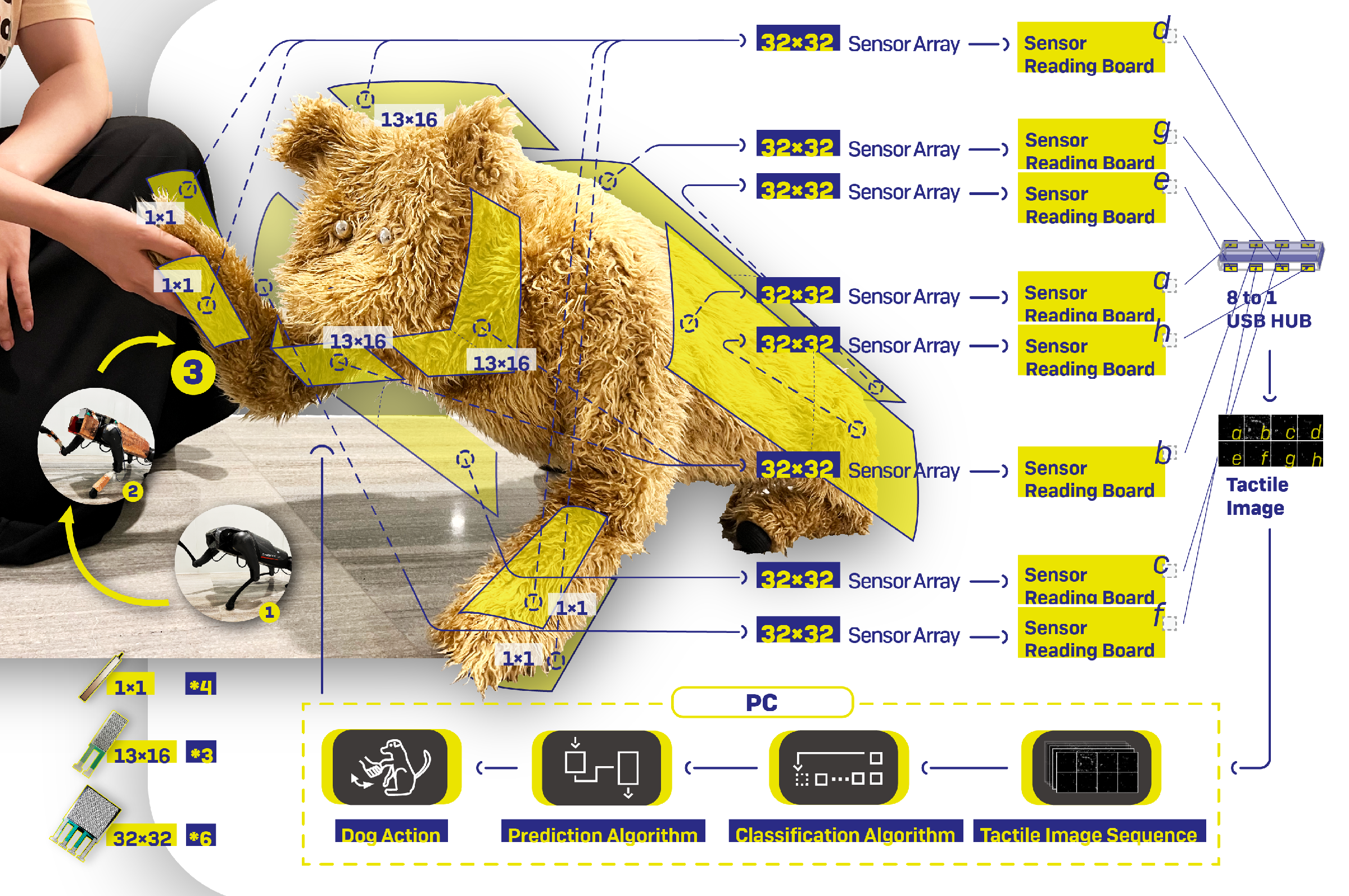

| Touch-and-Heal: Data-driven Affective Computing in Tactile Interaction with Robotic Dog. Shihui Guo*, Lishuang Zhan*, Yancheng Cao, Chen Zheng, Guyue Zhou, Jiangtao Gong (* co-first authors, shared with my advisor). Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (UbiComp/IMWUT), 2023. We propose a data-driven affective computing system based on a biomimetic quadruped robot with large-format, high-density flexible pressure sensors, which can mimic the natural tactile interaction between humans and pet dogs. |

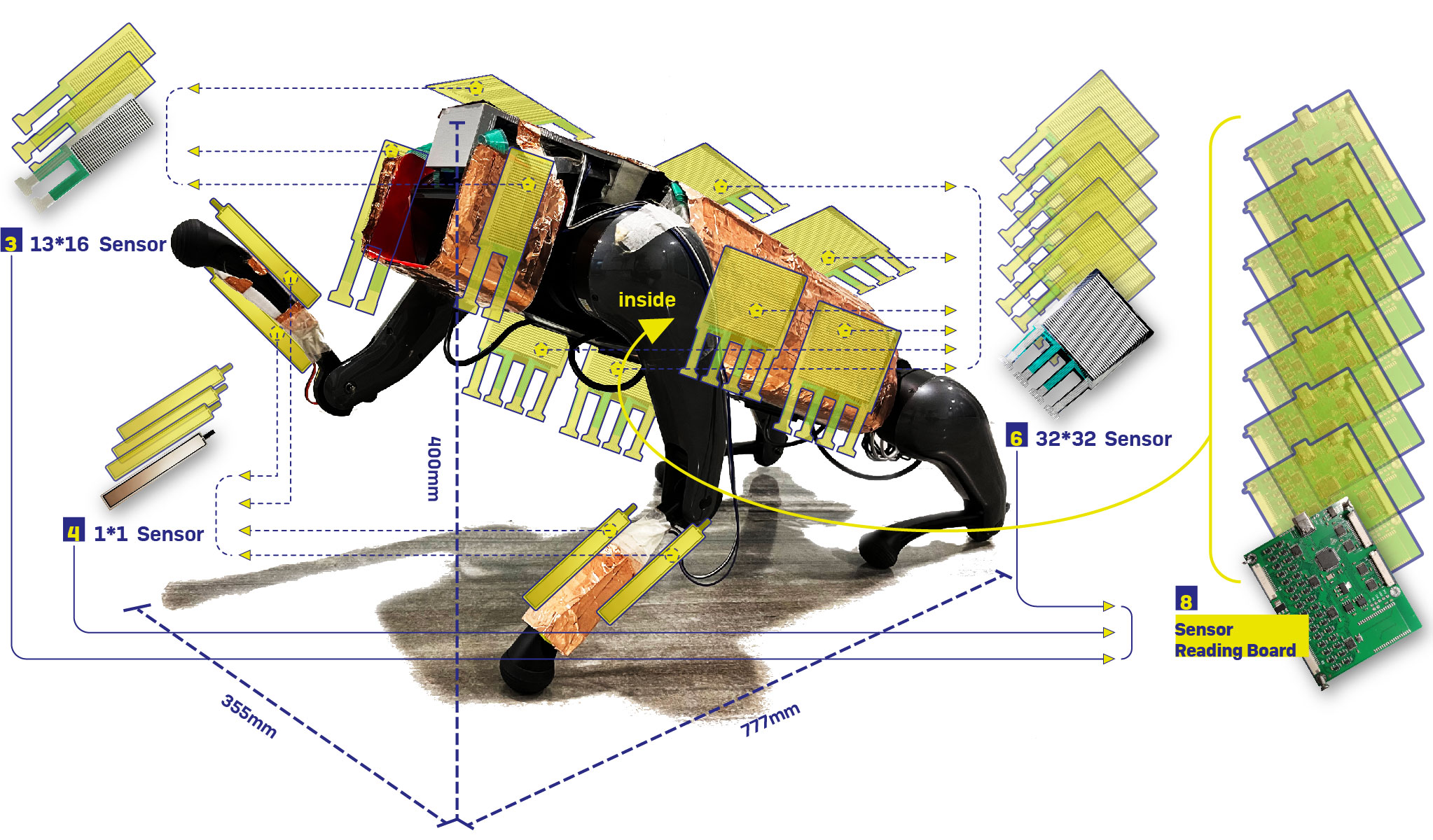

| Enable Natural Tactile Interaction for Robot Dog based on Large-format Distributed Flexible Pressure Sensors. Lishuang Zhan, Yancheng Cao, Qitai Chen, Haole Guo, Jiasi Gao, Yiyue Luo, Shihui Guo, Guyue Zhou, Jiangtao Gong* (* corresponding author). IEEE International Conference on Robotics and Automation (ICRA), 2023. In this paper, we design and implement a set of large-format distributed flexible pressure sensors on a robot dog to enable natural human-robot tactile interaction. |

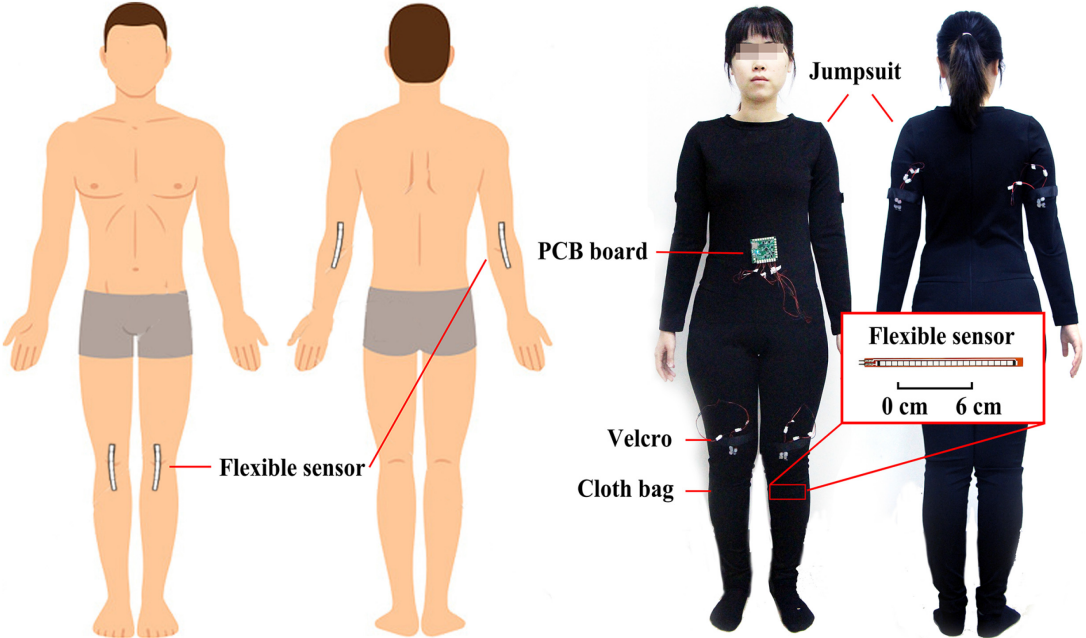

| Full-body Human Motion Reconstruction with Sparse Joint Tracking Using Flexible Sensors. Xiaowei Chen, Xiao Jiang, Lishuang Zhan, Shihui Guo*, Qunsheng Ruan, Guoliang Luo, Minghong Liao, Yipeng Qin (* corresponding author). ACM Transactions on Multimedia Computing, Communications, and Applications (TOMM), 2023. In this work, we propose a novel framework that accurately predicts human joint angles from the signals of only four flexible sensors, thereby achieving multi-degree-of-freedom joint tracking. |

| Handwriting Velcro: Endowing AR Glasses with Personalized and Posture-adaptive Text Input Using Flexible Touch Sensor. Fengyi Fang, Hongwei Zhang, Lishuang Zhan, Shihui Guo*, Minying Zhang, Juncong Lin, Yipeng Qin, Hongbo Fu (* corresponding author). Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies (UbiComp/IMWUT), 2023. Handwriting Velcro is a novel text input solution for AR glasses based on flexible touch sensors, which can easily be attached to different body parts, thus endowing AR glasses with posture-adaptive handwriting input. |